Our MCP Gateway Saves You Money and Tokens Automatically

ContextBridge launches the first intelligent MCP gateway, saving you money and tokens while improving tool selection and security.

MCP Explosion

The Model Context Protocol (MCP) has exploded in popularity over the last year and is now a key part of the AI tooling ecosystem. Developers, and even some technically minded non-developers, are connecting MCP servers to their coding assistants, chat clients, and agents, giving them access to tools and resources that would otherwise be out of reach.

Wasting Tokens (and Money) Without Knowing It

MCP is great because it makes it easy to connect LLMs to additional tools and resources. Unfortunately, the more MCP servers you connect to your client, the more of your context window their tool definitions consume. When an MCP client (such as a coding assistant, agent, or chat app) connects to an MCP server, it typically loads every tool definition that server exposes, including each tool’s name, description, and input schema, into the model’s context. With several servers connected, this can easily add tens of thousands of tokens to every request before you have typed a single word. Most of those definitions are irrelevant to the task at hand, so they waste tokens, which costs you money, makes you hit usage limits on premium models sooner, and lowers the accuracy of tool selection and therefore of your agent’s responses.
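To make the cost concrete, here is a rough sketch of how tool definitions add up. It assumes each tool is described the way MCP servers list them (a `name`, a `description`, and a JSON Schema `inputSchema`) and uses a crude 4-characters-per-token heuristic instead of a real tokenizer. The servers, tools, and helper names (`make_tool`, `estimate_tokens`, `context_cost`) are made-up placeholders for illustration.

```python
import json


def make_tool(name: str, description: str, params: dict[str, str]) -> dict:
    """Build a tool definition shaped like an MCP tools/list entry."""
    return {
        "name": name,
        "description": description,
        "inputSchema": {
            "type": "object",
            "properties": {p: {"type": t} for p, t in params.items()},
            "required": list(params),
        },
    }


def estimate_tokens(text: str) -> int:
    # Rough rule of thumb (assumption): about 4 characters per token.
    # Actual counts depend on the model's tokenizer.
    return max(1, len(text) // 4)


def context_cost(servers: dict[str, list[dict]]) -> int:
    """Estimated tokens every request carries when all tools are loaded up front."""
    return sum(
        estimate_tokens(json.dumps(tool))
        for tools in servers.values()
        for tool in tools
    )


# Hypothetical setup: 5 servers with 20 tools each and placeholder descriptions.
servers = {
    f"server_{s}": [
        make_tool(
            f"tool_{s}_{t}",
            "Performs an operation against an external service. " * 4,
            {"query": "string", "limit": "integer", "cursor": "string"},
        )
        for t in range(20)
    ]
    for s in range(5)
}

print(f"~{context_cost(servers)} tokens of tool definitions per request")
```

Real servers often ship longer descriptions and more detailed schemas than these placeholders, so real-world totals can be considerably higher.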

How ContextBridge Saves You Tokens (and Money)

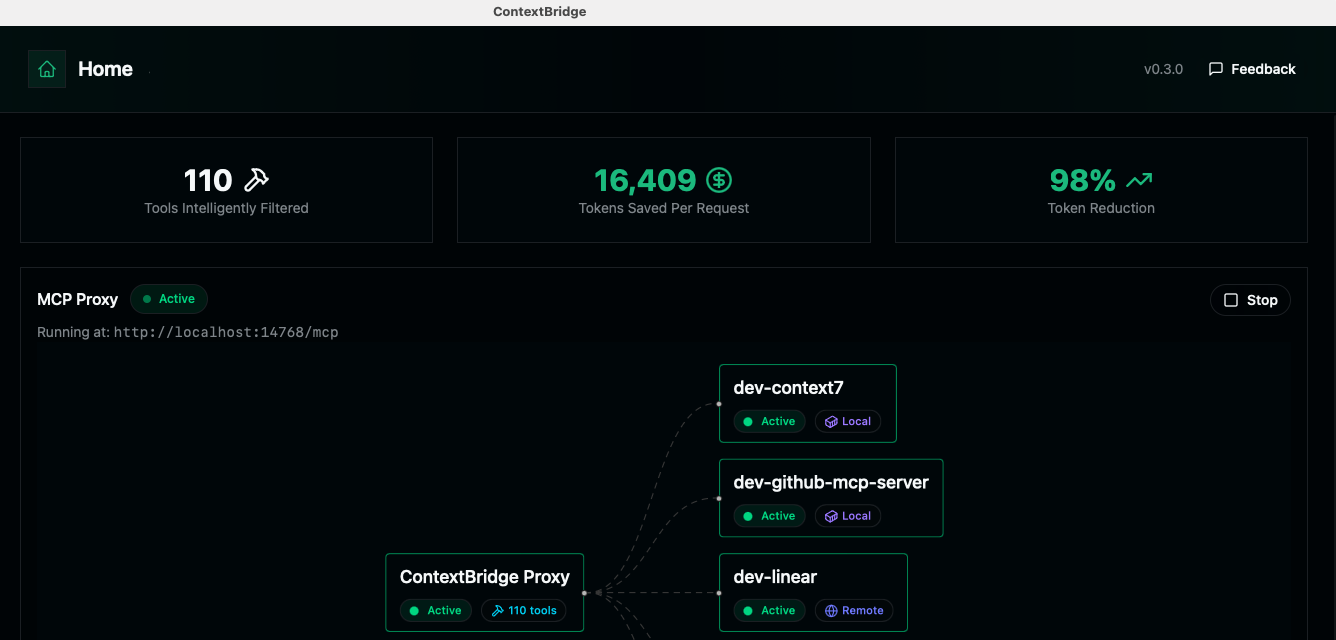

ContextBridge is an MCP gateway that reduces token waste by 90%+ while improving tool selection, performance, and security. Instead of adding every tool definition from every MCP server to your context window, ContextBridge lets your LLM look up the right tool for the task at hand. Rather than tens of thousands of tokens for tools you may or may not need, your context window holds only a few hundred tokens, and ContextBridge helps the LLM find the right tool when it needs one.
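The general pattern can be sketched as follows. This is a hypothetical illustration, not ContextBridge’s implementation: `Tool`, `ToolGateway`, and `find_tools` are made-up names, and simple keyword overlap stands in for whatever retrieval a production gateway uses (embedding search, for example). The idea is that the model sees only the small `find_tools` definition and receives the few matching tool definitions on demand.

```python
import re
from dataclasses import dataclass


@dataclass
class Tool:
    server: str  # upstream MCP server that owns the tool
    name: str
    description: str


def words(text: str) -> set[str]:
    """Lowercase word set; underscores split names like create_issue."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ToolGateway:
    """Hypothetical gateway: the model sees one find_tools meta-tool
    instead of every definition from every server."""

    def __init__(self, tools: list[Tool]) -> None:
        self.tools = tools

    def find_tools(self, task: str, k: int = 3) -> list[Tool]:
        # Score each tool by how many words it shares with the task description.
        task_words = words(task)
        scored = []
        for tool in self.tools:
            score = len(task_words & words(f"{tool.name} {tool.description}"))
            if score > 0:
                scored.append((score, tool))
        # Python's sort is stable, so ties keep registration order.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [tool for _, tool in scored[:k]]


gateway = ToolGateway([
    Tool("github", "create_issue", "Create a new issue in a GitHub repository"),
    Tool("github", "list_pull_requests", "List open pull requests for a repository"),
    Tool("slack", "post_message", "Post a message to a Slack channel"),
    Tool("postgres", "run_query", "Run a read-only SQL query against the database"),
])

for tool in gateway.find_tools("post a message to the team slack channel", k=1):
    print(tool.server, tool.name)  # → slack post_message
```

A real gateway would also need a way to invoke the chosen tool on its upstream server, which this sketch omits.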

Using ContextBridge

ContextBridge can be downloaded for free as a desktop app for individual users. For teams and organizations, we offer cloud and on-premises deployment options with additional features such as SSO integration, custom MCP catalogs, observability hooks, and more.

Beyond Saving Tokens: Improved Tool Selection and Security

Beyond saving tokens, ContextBridge also improves tool selection and strengthens the security of your MCP infrastructure.

On tool selection: the more tools you give your LLM, the worse it gets at choosing among them, because it has to pick the right one out of a long list of similar-looking options. This is a classic needle-in-a-haystack problem. By exposing only the tools your LLM needs for the task at hand, ContextBridge can greatly improve tool selection.

Many MCP servers are launched with npm or uv commands (such as npx or uvx) directly on the user’s machine with zero sandboxing. This is a serious security risk: a compromised or malicious server runs with the same access to your files, credentials, and network as your own user account. ContextBridge runs MCP servers in isolated Docker containers, which provides a higher level of security and creates opportunities to further monitor, audit, and secure your MCP infrastructure.
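To illustrate the difference, the sketch below contrasts a typical unsandboxed launch with a Docker invocation that applies common isolation flags. It is a generic example, not ContextBridge’s actual configuration: the function names, package and image names, and environment variable are placeholders, the resource limits are arbitrary, and which restrictions are appropriate depends on the server (one that calls a remote API, for instance, needs network access).

```python
import shlex


def unsandboxed_command(package: str) -> list[str]:
    # Common local launch: the server process runs as your user, with your
    # files, environment variables, and network all within reach.
    return ["npx", "-y", package]


def sandboxed_command(image: str, allow_network: bool = False,
                      pass_env: tuple[str, ...] = ()) -> list[str]:
    argv = [
        "docker", "run",
        "-i",                    # keep stdin open: stdio MCP servers speak over stdin/stdout
        "--rm",                  # delete the container when the session ends
        "--read-only",           # immutable root filesystem
        "--tmpfs", "/tmp",       # in-memory scratch space for servers that need to write
        "--cap-drop", "ALL",     # drop all Linux capabilities
        "--security-opt", "no-new-privileges",
        "--memory", "512m",      # resource limits (illustrative values)
        "--cpus", "1",
    ]
    if not allow_network:
        argv += ["--network", "none"]  # no network unless the server needs it
    for name in pass_env:
        # "-e NAME" with no value copies NAME from the caller's environment,
        # so only explicitly listed variables reach the container.
        argv += ["-e", name]
    argv.append(image)
    return argv


print(shlex.join(unsandboxed_command("@example/mcp-server")))
print(shlex.join(sandboxed_command(
    "example/mcp-server:latest", allow_network=True, pass_env=("EXAMPLE_API_KEY",)
)))
```

Because the command is built as an argument list rather than a shell string, it can be handed directly to a process launcher such as `subprocess.run` without shell-quoting issues.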

Try ContextBridge Today

We’re opening up ContextBridge to the public today. Any user can download the desktop app for free from the ContextBridge website and start saving tokens immediately.

For teams and organizations, we’re offering free limited trials for up to 25 users. Contact us at hello@contextbridge.ai to find out more or schedule a meeting with our team.

Stay Connected

Follow us on X/Twitter, YouTube, and Bluesky to stay up to date on the latest news and updates.